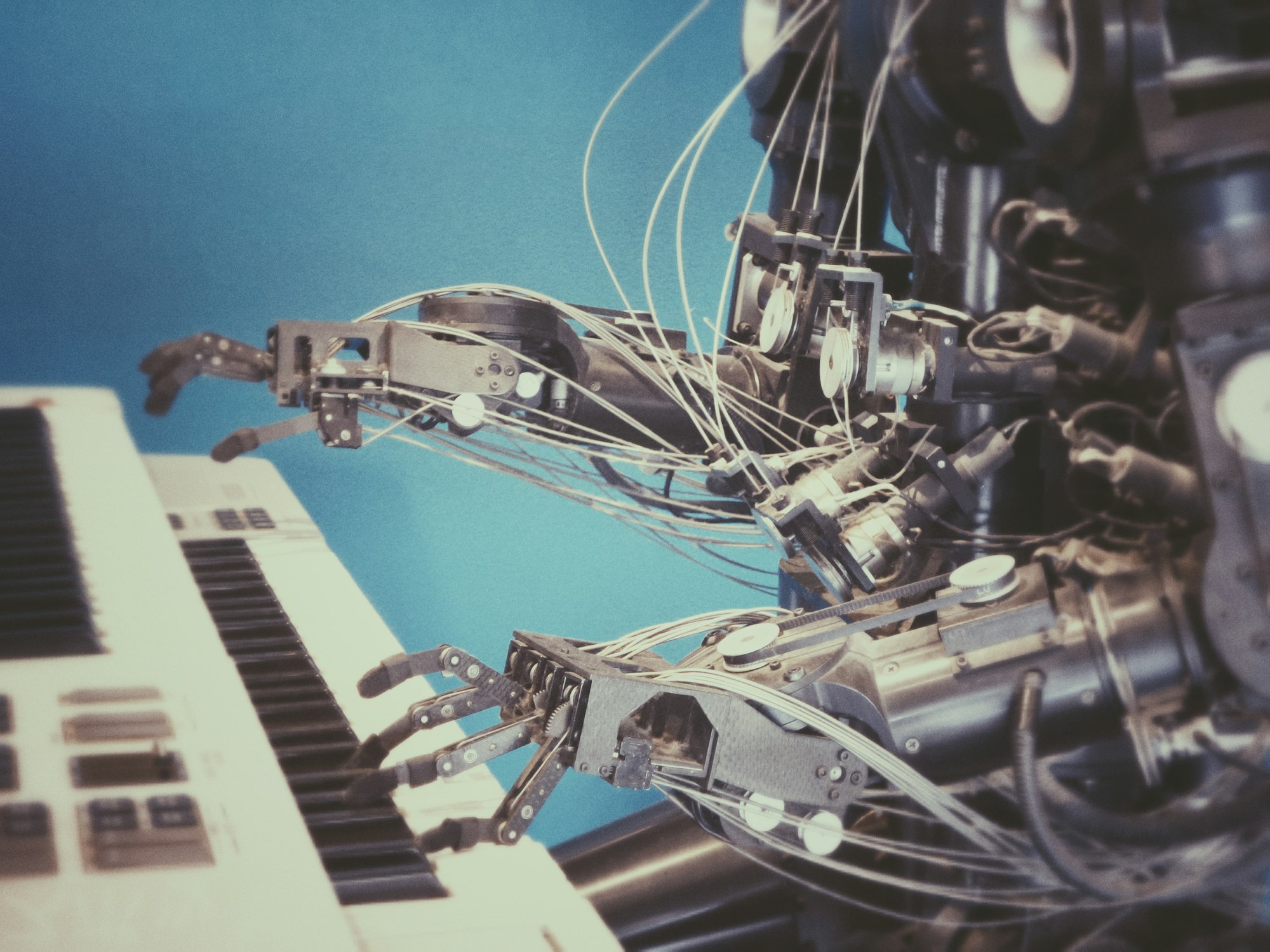

Artificial Intelligence - harmful or helpful?

Artificial Intelligence - Reading Assignment - AI course @ ZHAW: blog article written at the beginning of the semester and the course.

AI Course Article - Reading Assignment

I'm currently studying Computer Science (Bachelor's degree) @ ZHAW and I’m taking a class on Artificial Intelligence (AI). One of the practical lab assignments is to write a blog post on the article “What’s Wrong with AI? A Discussion Paper”, which is mandatory reading for the course.

Background

Artificial Intelligence (AI) is a very broad interdisciplinary field with contributions from many distinct technical and philosophical disciplines. It is also a very active and rapidly developing area of research. Hence, a wide body of different approaches and methods exists. Because no single course can cover all of this, we bring you into contact with relevant literature right from the start.

Article for reading assignment

The article we have to read is the following:

- Dirk Helbing, “What’s Wrong with AI? A Discussion Paper”, SI Magazine, 2020.

Task

Read the article above and make up your mind which side of the discussion “AI: helpful or harmful” you would take, and why.

My summary on "AI: helpful or harmful?"

Looking back at our technical and material achievements, we have reached a level where new solutions attempt to fix huge and complex problems. Put more plainly, we are looking for very powerful solutions to increasingly complex problems. But with great power comes great responsibility, and power can always be used for bad things as well, perhaps not even intentionally. Sadly, naivety and childish thinking are also among humanity's weaknesses, alongside megalomania and arrogance, and they can seriously undermine the wise and productive use of very powerful solutions.

Artificial Intelligence (AI) should help us overcome complex problems like never before. If set up and used wisely, it is considered a very powerful solution to complex problems.

AI: helpful or harmful?

Harmful or helpful? I'm currently undecided! I honestly think that AI is a tool like so many other things we've invented. Nearly all "artefacts" can, in some way, be used for either harmful or helpful purposes. It depends on the user's mind. In reality there is "still" nothing wrong with AI itself, but perhaps with some people's minds: the intended use case is sometimes utterly questionable.

Two Examples

AI used for a potentially harmful use case:

- Deliberate manipulation of the masses (even our children) to maximize profits: https://www.youtube.com/watch?v=C74amJRp730&ab_channel=TED

AI used for a potentially helpful use case (more of those please!):

- Using data and technology to gain more insights and to find new ways to fight diseases: https://www.theverge.com/2014/9/3/6102377/google-calico-cure-death-1-5-billion-research-abbvie

We're not there yet!

I also think that a lot more groundwork needs to be done and that we don't yet understand enough about how our brains work and what intelligence really is. And I don't think that dead matter can start to think or even develop a consciousness, as so many believe. There are just too many things missing that cannot be simulated using only bits and bytes. I believe that only some kind of "living" organism can develop true intelligence and consciousness, and I don't think this will happen by accident just by "stitching" everything together.

In my view, developing intelligence requires certain conditions that are probably never met in a machine or computer as we know them today. I have written some of them down in the following list:

- Entanglement, i.e. being deeply adapted to the environment

- Organic material for tuning into / connecting with the universe we call reality

- Self-awareness (knowing who you are in the world you live in) and consciousness (or maybe it's the other way around, and intelligence generates consciousness?)

- Feelings, emotions and beliefs

- Motivation

- Imagination

- Knowledge

"The true sign of intelligence is not knowledge but imagination." - Albert Einstein

I can also imagine that dualism and symmetry (following the golden ratio) could play a big role.

Partial imitation of brain characteristics?

We can certainly take different characteristics of intelligence, or of how we think our brain works, and try to copy them as closely as possible in a computer program, or even build special hardware for them. In my opinion, this is exactly what we are doing with "AI". There are now many separate fields and directions of research, for example machine learning, pattern recognition or natural language processing. In my view, these are only "parts" of an intelligence.

Unclear impact on our society

Using AI more broadly in companies, web applications or even governments could lead humanity into an unknown future. What if we get it wrong? Is AI doing what it is intended to do? Or is it already so complex in certain use cases that it takes years to evaluate whether the output is plausible or wrong?

Our AI solutions need to be tested in many ways to make sure the output is reliable and plausible. If we have neither the experience nor the means to check the output, do we blindly follow it and tell everyone it's the truth?

Known possible side effects of AI

- A slight change in the input parameters can lead to a totally different result

- Wrong or incomplete training data heavily influences the outcome. A blinders effect, i.e. a one-sided view?

- The so-called resonance effect: suggestions that are sufficiently customized to each individual. In this way, local trends are gradually reinforced by repetition, leading all the way to the "filter bubble" or "echo chamber effect": in the end, all you might get is your own opinions reflected back at you.
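The resonance effect in the last point can be illustrated with a toy model. The following Python sketch is my own simplification and not a model from Helbing's article: the hypothetical function `simulate` tracks how a user's attention is split across three topics. In each round, the recommender over-represents whatever the user already consumes most (controlled by an assumed exponent `alpha`), and the user's consumption drifts a little (an assumed `learning_rate`) towards what was recommended.

```python
# Toy model of the resonance / filter-bubble effect (assumption: my own
# simplification, not taken from the article).


def amplify(shares, alpha):
    """Recommendation mix: raise each topic share to the power alpha and
    renormalise. alpha > 1 over-represents what the user already likes most."""
    powered = [s ** alpha for s in shares]
    total = sum(powered)
    return [p / total for p in powered]


def simulate(shares, alpha=2.0, learning_rate=0.2, rounds=50):
    """Each round, the user's topic shares drift towards the recommended mix.
    Returns the shares after every round (including the starting point)."""
    history = [list(shares)]
    for _ in range(rounds):
        recommended = amplify(shares, alpha)
        shares = [(1 - learning_rate) * s + learning_rate * r
                  for s, r in zip(shares, recommended)]
        history.append(shares)
    return history


if __name__ == "__main__":
    start = [0.40, 0.35, 0.25]  # hypothetical interest in three topics
    for alpha in (1.0, 2.0):
        final = simulate(start, alpha=alpha)[-1]
        print(f"alpha={alpha}: final shares {[round(s, 3) for s in final]}")
```

With `alpha = 1.0` the recommendations simply mirror the user's current interests and the shares stay where they started. With `alpha = 2.0` the initially favourite topic gradually crowds out the others, a very crude picture of an echo chamber. Real recommender systems are, of course, far more complex than this.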

When it comes to decisions about individual humans, humanity or society, we need ethical rules and regulations on how we use AI and on what AI may and may not decide.

Informational self-determination? Sovereignty over our personal data

Another important issue we need to clarify is our personal data. Informational self-determination is something we need to implement in our society. To force companies to follow the rules on informational self-determination, we should make it a global law.

At the moment, our data does not belong to us and is even sold to the highest bidder. We have no real control over our personal data, nor do we know how we have already been judged by an AI system. We don't even know which companies have created a profile of us and use some kind of score to categorize our behaviour.

Will the robotics and automation revolution make billions of people unemployed?

Lots of people fear that this steadily progressing automation revolution will make billions unemployed. But we have had such revolutions before, like the industrial revolution, and history has taught us that new jobs will come up. All machines need maintainers: people who take care of them, repair them and build them. New jobs could emerge in many areas we can't even think of yet.

We will probably move even further away from physical work and shift towards orchestrating, analytical, psychological, scientific, cultural or data-driven jobs. We should also keep in mind that this transition will not take place overnight and that not all countries have the same pace and possibilities.

Fear of the singularity: is AI a threat to humanity?

What if we create humanity's darkest nightmare with AI? How would a superintelligence behave towards us?

“Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.” - Stephen Hawking.

“We should be very careful about artificial intelligence. If I had to guess at what our biggest existential threat is, it’s probably that.” - Elon Musk

“(I am) in the camp that is concerned about super intelligence” - Bill Gates

Reading the quotes above raised a question for me: do we need some kind of kill switch? But a superintelligence would probably find out about it and simply remove the kill switch. Then again, some people have always seen a threat in every new invention. Personally, I feel we are not yet at the point where we need to fear such a scenario, but maybe in one or two decades? Only the future can tell.

Too much of a hype! Where is the modesty?

AI has been hyped ever since its beginnings, and many experts have voiced wild speculation about its almost magical potential. We now even have apocalyptic scenarios for the whole of humanity. Wow! But I miss modesty and honesty. We have come far, but there is still a long way to go, and we are not as good as we always tend to think. Just because some of our algorithms have characteristics of an AI doesn't mean that we actually have an AI.

Nevertheless, I think we have made good progress in many fields, and I'm excited about the possibilities and potential of all topics related to AI: machine learning (supervised, unsupervised and reinforcement learning), natural language processing, deep learning, neural networks, neuromorphic computing, bioinformatics, robotics, biomechanics, computer vision (image/video processing), IoT and autonomous driving, to name a few.

I'm really eager to learn more about some of the topics mentioned above. I'm currently at the beginning of this semester's AI course @ ZHAW, and at the end I have to write another blog post replying to this one. Let's see if and how I'll change my mind!

One more thing ...

Where is the feminine view of AI?

How would a team of female AI pioneers think, and how would they tackle problems? I really miss the female perspective in many scientific fields; AI and computer science are just some of them. If almost only masculine thinking is involved, it probably ends up like the currently "preferred" solution for flying to space: take a group of volunteers, put them on top of a phallus filled with explosives and blast them into space!

Wouldn't women come up with a totally different solution?! Maybe even a solution that defies gravity instead of literally blowing things up in the air?

Links

If you want to learn a little bit more about the AI course @ ZHAW: https://stdm.github.io/ai-course/

Below are just the notes I took while reading the article mentioned at the top of this post:

My Article Notes

What is interesting to me

- A slight change in the input parameters can lead to a totally different result

- Wrong or incomplete training data heavily influences the outcome. A blinders effect, i.e. a one-sided view?

- Side effects such as the so-called resonance effect: suggestions that are sufficiently customized to each individual. In this way, local trends are gradually reinforced by repetition, leading all the way to the "filter bubble" or "echo chamber effect": in the end, all you might get is your own opinions reflected back at you.

- Ethical rules and regulations needed

- Too much of a hype. More modesty needed?

- Will we be able to understand what we've built, and how and why the AI decided the way it did?

Seems relevant for the future

- How to control and steer AI? Kill switch?

- What kind of future will it be? Will the robotics and automation revolution make billions of people unemployed?

- Is a decision by an "algorithm" over a human life something we could accept?

- Data belongs to the user: Informational self-determination

- AI Religion?

- Experts have different views and expectations for the future of AI.

- More basic groundwork needs to be done. Maybe even back to the drawing board in some cases?

- It is important to figure out what AI can and cannot do. Is a rational decision really the "right" decision when it comes to living or dying? Are we humans becoming things in the "artificial" mind of an AI? Do we want this?

Is not understandable at the moment

- Is it really AI, created from "dead" matter? What do the "A" and the "I" really mean?

- Where does this lead us?

- Possibilities? Upgradable body parts to stay competitive? Can we change our life span by replacing real organs with technical ones?

- Real risks, e.g. the technological singularity? What will happen to humanity?

- How will our society change?